A comparison of web servers for running Chicago Boss

Chicago Boss is a web development framework much like Ruby on Rails, but written in Erlang. At Kivra we use Erlang extensively and continuously evaluate and test new technology. We contribute to a lot of open source projects, and our main technology interest is massive data at massive scale.

Yaws is currently not supported by Chicago Boss, but since we like Yaws and wanted to see how well it works with Chicago Boss compared with the other web servers in this test, we patched Chicago Boss to support it. Evidently there is more to be done before this integration is solid, as the graphs below show.

This comparison is purely academic: we want to better understand where in the chain things break and where we should focus our efforts to achieve even better performance, uptime and scalability. When we choose a web server for our projects we look at a number of factors, of which speed is only one.

Also, bear in mind that the numbers in this test are for each web server run through simple_bridge and fed into Chicago Boss. Depending on the quality of the simple_bridge implementation for a given web server, the results may differ significantly, as the raw misultin vs. Chicago Boss+misultin graph below shows.

Tech:

Running an HTTP load test at somewhat massive scale requires some tuning to get maximum performance. For this test we decided on the bare minimum: standard kernel, scheduling, etc., with changes only to the open file descriptor limit and minor changes to the IP stack.

Each connection we make from the client machine requires a file descriptor, and by default this is limited to 1024. To avoid the “Too many open files” problem you need to raise the ulimit for your shell. We also ended up tweaking “ipv4/ip_local_port_range” on the client machine to be able to open as many ports as needed. Both can be changed in the current shell on the client machine:

    # echo "32768 65535" >/proc/sys/net/ipv4/ip_local_port_range
    # cat /proc/sys/net/ipv4/ip_local_port_range
    32768 65535
    # ulimit -n 65535
    # ulimit -n
    65535
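As a rough illustration of why the port range matters: every connection from the client to the same server address and port needs its own local port, so the size of the range caps the number of simultaneous connections from one client IP. The small helper below (the name `port_count` is ours, not part of any tool) just does that arithmetic.

```shell
#!/bin/sh
# Number of local ports in an inclusive "low high" range, in the same
# form as /proc/sys/net/ipv4/ip_local_port_range.
# port_count is a hypothetical helper name used only for this sketch.
port_count() {
    # $1 = lowest port, $2 = highest port (inclusive)
    echo $(( $2 - $1 + 1 ))
}

# The client range used in this test:
port_count 32768 65535
```

On a live machine the same function could be fed the current setting with `port_count $(cat /proc/sys/net/ipv4/ip_local_port_range)`.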

We’re running httperf, a tried and tested HTTP load testing tool, and we tweak some things before compilation so that it can handle the load.
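For readers who want to try something similar, here is a minimal sketch of how a rate sweep with httperf could be scripted. It is not the exact invocation used for this test. The rate range (1000 to 12000 per second) and the five-second timeout come from the results discussion in this post; the server address, port, URI, step size and per-step duration are assumptions made for illustration. The script only prints the commands (a dry run).

```shell
#!/bin/sh
# Assumed, hypothetical target and step length; adjust to your setup.
SERVER="${SERVER:-192.0.2.10}"
PORT="${PORT:-8001}"
URI="${URI:-/test/index}"
DURATION="${DURATION:-10}"   # approximate seconds per step (assumption)

# Print one httperf command line for a given connection rate.
# --num-conns is scaled so that each step lasts about DURATION seconds.
httperf_cmd() {
    rate=$1
    echo "httperf --hog --server $SERVER --port $PORT --uri $URI --rate $rate --num-conns $(( rate * DURATION )) --timeout 5"
}

# Dry run: print the commands for 1000..12000 per second in steps of 1000.
rate=1000
while [ "$rate" -le 12000 ]; do
    httperf_cmd "$rate"
    rate=$(( rate + 1000 ))
done
```

Piping the output to `sh` would actually run the steps one after another; `--hog` lets httperf use as many local ports as it needs.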

The server is a 16GB 8CPU machine running Erlang R15B with kernel-poll enabled:

Erlang R15B (erts-5.9) [source] [64-bit] [smp:8:8] [async-threads:0] [hipe] [kernel-poll:true]

We also tweak some parameters on the server machine:

    # ulimit -n 65535
    # ulimit -n
    65535
    # sysctl -p
    net.core.rmem_max = 33554432
    net.core.wmem_max = 33554432
    net.ipv4.tcp_rmem = 4096 16384 33554432
    net.ipv4.tcp_wmem = 4096 16384 33554432
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_mem = 786432 1048576 26777216
    net.ipv4.tcp_max_tw_buckets = 360000
    net.core.netdev_max_backlog = 2500
    vm.min_free_kbytes = 65536
    vm.swappiness = 0
    net.ipv4.ip_local_port_range = 1024 65535
    net.core.somaxconn = 65535
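If you want to confirm that settings like these are really in effect, one possible approach is to compare a list of `key = value` lines against what the running kernel reports under /proc/sys. The sketch below assumes the usual mapping from dotted sysctl keys to /proc/sys paths; the function names `sysctl_path` and `check_sysctls` are hypothetical helpers, not existing tools.

```shell
#!/bin/sh
# Map a sysctl key such as net.core.somaxconn to its /proc/sys path.
sysctl_path() {
    echo "/proc/sys/$(echo "$1" | tr . /)"
}

# Read "key = value" lines on stdin and compare each with the live value.
# Prints "ok", "DIFF" or "n/a" (key not readable on this machine).
check_sysctls() {
    while IFS='=' read -r key want; do
        key=$(printf '%s' "$key" | tr -d ' \t')
        # Skip blank lines and comments.
        case "$key" in ''|'#'*|';'*) continue ;; esac
        # Normalise whitespace so "4096  16384" matches tab-separated /proc output.
        want=$(printf '%s' "$want" | tr -s ' \t' '  ' | sed 's/^ //; s/ $//')
        path=$(sysctl_path "$key")
        if [ ! -r "$path" ]; then
            echo "n/a  $key"
            continue
        fi
        have=$(tr -s ' \t' '  ' < "$path" | sed 's/^ //; s/ $//')
        if [ "$have" = "$want" ]; then
            echo "ok   $key"
        else
            echo "DIFF $key: have '$have', want '$want'"
        fi
    done
}
```

A typical use would be `check_sysctls < /etc/sysctl.conf`, assuming that is where the settings are kept.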

We’ve used Roberto Ostinelli’s excellent “A comparison between Misultin, Mochiweb, Cowboy, NodeJS and Tornadoweb” as a model so that the results can be correlated to some extent. There is of course a lot of difference between raw web servers like the ones in Ostinelli’s test and running ChicagoBoss+ErlyDTL+Simple_Bridge+<web server>, so don’t draw too many conclusions from a comparison, since other factors also come into play.

We’ve used a simple controller which generates a list of random length (1-20) and passes it to a view for rendering. This way we get to test a normal MVC-type pattern with ErlyDTL parsing.

Controller:

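    -module(loadtest_test_controller, [Req]).
    -compile(export_all).

    %% GET handler: build a list of random length (1-20) and pass it to the view.
    index('GET', []) ->
        {A,B,C} = erlang:now(),
        random:seed(A,B,C),
        R = random:uniform(20),
        List = getList(R),
        {ok, [{list, List}]}.

    %% Build [[{val, R}], ..., [{val, 1}]] so the template can read l.val.
    getList(R) ->
        lists:foldl(fun(N,A) ->
                            [[{val, N}]|A] end,
                    [], lists:seq(1,R)).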

View:

    <html>
    <head>
    <title>{% block title %}Loadtest{% endblock %}</title>
    </head>
    <body>
    {% block body %}
    {% if list %}
    {% for l in list %}
    -{{ l.val }}<br/>
    {% endfor %}
    {% else %}
    Nope
    {% endif %}
    {% endblock %}
    </body>
    </html>

For the standalone misultin test we used this:

    -module(load).
    -export([start/1, stop/0]).

    % start misultin http server
    start(Port) ->
        misultin:start_link([{port, Port}, {loop, fun(Req) -> handle_http(Req) end}]).

    % stop misultin
    stop() ->
        misultin:stop().

    % callback on request received
    handle_http(Req) ->
        {A,B,C}=erlang:now(),
        random:seed(A,B,C),
        R = random:uniform(20),
        List = getList(R),
        Req:ok(io_lib:format("<html>~n<head>~n<title>Loadtest</title>~n</head>~n<body>~s</body>~n</html>", [printList(List, [])])).

    getList(R) ->
        lists:foldl(fun(N,A) ->
                            [N|A] end,
                    [], lists:seq(1,R)).

    printList([], A) ->
        A;
    printList([H|T], A) ->
        V = lists:flatten(io_lib:format("~p", [H])),
        NewAcc = V++A,
        printList(T, NewAcc).

Here are the actual results from the tests:

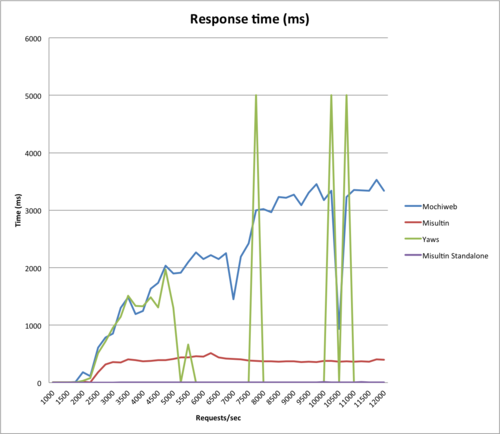

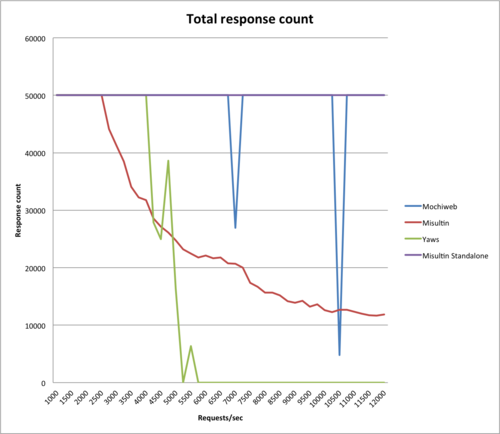

The ideal graph would be linear from 1000 responses/sec (start) to 12000 responses/sec (end), which is basically what we get when running misultin standalone.

Here we see that all web servers top out at around 2000 responses/sec. There’s clearly something strange going on with Yaws, since it dies and starts generating errors, as seen in the graphs below. The cause is probably something in the simple_bridge API bridging Chicago Boss <> Yaws, since we know from experience that Yaws standalone is a rock-solid web server.

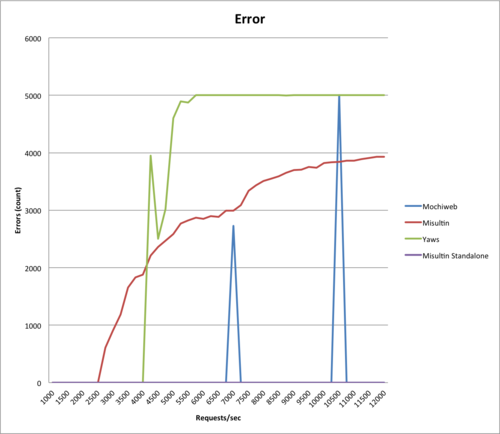

This is an aggregated view of all the errors that occurred. In the case of misultin we started getting “connection reset” errors after a while. Yaws gave us “connection timeout”, which means it didn’t respond within the given five seconds. Mochiweb is pushing along nicely, with two spikes that account for all of its errors.
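Error counts like these can be pulled out of httperf’s summary. The sketch below assumes the summary contains lines starting with `Errors:` followed by name/count pairs, where `client-timo` counts expired `--timeout` deadlines and `connreset` counts connection resets. The function name `httperf_errors` is a hypothetical helper, and the sample input is made up for illustration; it is not data from this test.

```shell
#!/bin/sh
# Print each "name count" pair from httperf's "Errors:" summary lines.
httperf_errors() {
    awk '/^Errors:/ { for (i = 2; i < NF; i += 2) print $i, $(i + 1) }'
}

# Made-up sample input for illustration only (not measured data).
httperf_errors <<'EOF'
Errors: total 12 client-timo 10 socket-timo 0 connrefused 0 connreset 2
Errors: fd-unavail 0 addrunavail 0 ftab-full 0 other 0
EOF
```

In practice you would pipe a saved httperf report into `httperf_errors` and pick out the counters you care about with `grep`.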

This is the average response time in ms for a request. The Y axis uses a logarithmic scale. A curve that stays flat or grows only slowly means there’s a certain fixed overhead (such as setting up sessions, etc.) when serving a request, but that this overhead doesn’t grow with the load. This is good: you want response time to be O(log n) or O(1) in the request rate here.

This graph is basically the inverse of the error graph, showing at how many req/sec each web server starts to crumble under the load and generate errors.

It’s been great fun performing these tests, and now comes the really fun part: using these insights to try to create something that performs even better. Misultin standalone is really the textbook example of how a web server should perform. Since we don’t get those numbers when running with Chicago Boss, there’s clearly a great deal of potential here.

We used misultin standalone as a reference, but could just as easily have used Yaws or Mochiweb, and they would probably have performed equally well. This test wasn’t about which web server performs best, though, but about how well they perform under Chicago Boss, which as the tests show is a whole other thing.

Hope you enjoyed this!